This is a very important article: in it I explain complete, end-to-end SEO audit guidelines based on Google’s latest updates up to December 2022. You will learn beginner to advanced SEO audit processes through real project examples that you can apply to any type of website. Before we start, let’s first understand the basics.

SECTION # 1 – OVERVIEW AND BASICS

What is SEO Audit?

An SEO audit is the process of evaluating how well your web presence follows best practices and how search-engine-friendly your site is. The purpose of the audit is to identify as many foundational issues affecting organic search performance as possible, and its main goal is to help you find and fix those problems so that the website can achieve higher rankings.

SEO Audit – The Basics

How Does Google Work?

To understand how SEO audits work, we need to take one step back and understand how Google works at a core level. For those who don’t know, Google has a crawler, called Googlebot, that is often referred to as a spider.

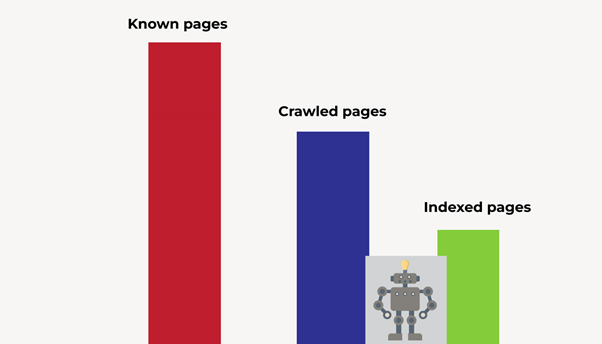

What it does is go out across the web and find web pages, of which there are trillions. Once it finds those pages, they fall into three categories: known pages, crawled pages, and indexed pages.

Known pages are, as the name suggests, pages that Google knows about. Crawled pages are pages that Google’s spider has actually visited and crawled, so it has all the information and code details for that page. Indexed pages are the pages that Google has crawled and decided to add to its index, which allows users to find them in Google Search results.

On top of this, once a page has been indexed, Google’s core algorithm determines where that page is going to rank. That’s essentially how Google works at a basic level: it finds pages, crawls them, and adds the good ones to the index. Any problems on your website, such as poorly optimized on-page SEO or slow page load times, will be found by Google’s spiders when they crawl your site, and they can have a big impact on where your website ranks. That’s why it’s super important to carry out an SEO audit from day one, whether it’s your own site or a client’s site.

Now that we’ve covered what an SEO audit is and how Google works, here are the categories of SEO problems along with an SEO audit checklist, to help you understand which steps to follow and how an SEO audit works.

SEO Audits – The 4 Categories

1). Technical Problems

- XML Sitemaps

- Load Times

- HTTP vs. HTTPS

2). On-page SEO Problems

- Missing page titles

- Missing meta tags

- Duplicate Content

3). Off-page SEO Problems

- Spammy Links

- Backlink Profile

- Etc

4). General Problems

- Analyzing organic traffic

- Reviewing “low-hanging fruit”

Here is the SEO audit checklist, organized by the four categories of SEO problems, so that you can focus and plan your tasks accordingly.

SEO Audit Checklist:

1). Technical Problems

- CHECK FOR MULTIPLE VERSIONS OF THE SITE

- CHECK THE XML SITEMAP

- CHECK THE WEBSITE’S PAGE SPEED

- CHECK IF THE SITE IS MOBILE FRIENDLY

- CHECK FOR 404 PAGES + OPTIMIZED URLS

2). On-page SEO Problems

- CHECK THE PAGE TITLES

- CHECK THE META DESCRIPTION

- CHECK THE HEADING TAGS (H1, H2, H3s, H4s…)

- CHECK FOR DUPLICATE CONTENT

- CHECK FOR THIN CONTENT

- CHECK FOR BROKEN LINKS

- CHECK THE SITE’S INTERNAL LINK STRUCTURE

3). Off-page SEO Problems

- CHECK THE SITE’S BACKLINK PROFILE

- CHECK THE SITE’S ORGANIC TRAFFIC

4). General Problems

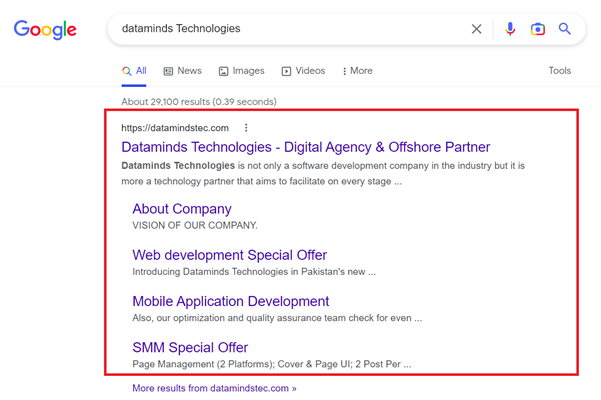

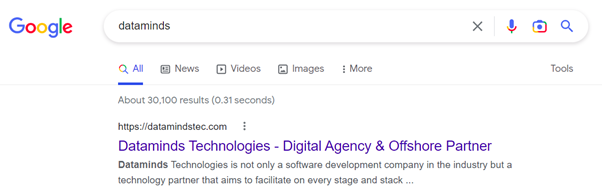

- CHECK THAT THE SITE RANKS FOR ITS BRAND NAME

- CHECK GOOGLE ANALYTICS TRAFFIC

- CHECK GOOGLE SEARCH CONSOLE (FORMERLY WEBMASTER TOOLS) ERRORS & INDEXING ISSUES

What tools can you use for an SEO audit?

This is a question I get asked literally every week, and I don’t blame people for asking: if you search online for SEO audit tools, the information is so conflicting and overwhelming that all it does is confuse you. In this blog, I’m going to demystify the whole SEO audit tool question and tell you exactly which tool we use at our agency, Dataminds Technologies, and which one I recommend for you.

When you search online for an SEO audit tool, these are the three biggest tools you’ve probably come across:

1). DeepCrawl (now rebranded as Lumar)

2). Sitebulb

3). Screaming Frog, probably the most common of the three

DeepCrawl and Sitebulb are both paid tools, and they do work very well. However, I don’t find them any more effective or useful than Screaming Frog, which is free to use for smaller sites. Screaming Frog is the tool we use ourselves at our SEO agency, Dataminds Technologies, and we highly recommend it over any other SEO audit tool, mainly because it’s free and it does everything the other two tools do. The free version crawls up to 500 URLs, and since most readers of this blog will have business websites or small and medium e-commerce websites with fewer than 500 pages, you can use Screaming Frog completely free. If your website has more than 500 pages, you’ll unfortunately have to buy the paid version, which I believe is £185 for an annual license, and that is still really good value. It’s easy to use and a very comprehensive tool for SEO audits, especially for technical SEO, on-page SEO, and general SEO issues, which helps you with website speed, optimization, and ranking faster.

To get started, go to the Screaming Frog website and download the SEO Spider. It’s a desktop application, and it’s easy to use.

If you scroll down the page, you can see all of Screaming Frog’s features. With this tool, you can easily find any broken links on your website. You can analyze page titles and meta descriptions, and you can even generate XML sitemaps. You can crawl any website, not just WordPress sites: JavaScript websites, Shopify, Magento, Wix, Weebly, custom-built sites, and e-commerce stores all work. You can find any redirects your website has, such as 301 redirects, and you can discover duplicate content. With the paid version, you can also integrate it with Google Analytics, Google Search Console, and PageSpeed Insights. These integrations are nice to have, but they aren’t essential for carrying out an SEO audit. One feature I really like, which Screaming Frog added not too long ago, is the ability to view a website’s visual site architecture. If that doesn’t make sense right now, don’t worry; I’ll show you an example in the next section. Screaming Frog works on Windows, macOS, and Linux, so you shouldn’t have any issues using it. Go ahead and download it, and in the next section I’ll give you a brief overview of the information it gives you and how it works.

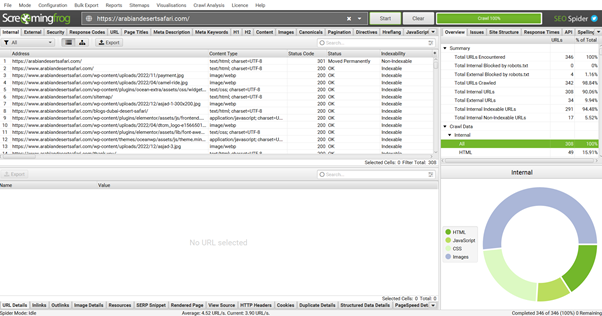

Now I’m going to show you how to carry out your first crawl and give you a sneak preview of all the information Screaming Frog returns when you crawl a website. Once you’ve downloaded Screaming Frog and opened it for the first time, it will look similar to this.

How do you crawl a website? It’s very easy: enter your website URL into the bar at the top and click Start. As an example, I’ll be using the website Top Desert Safari Dubai.

I do not own this website. I chose it as an example because this type of website is heavily focused on SEO: Dubai tourism keywords such as Desert Safari Dubai, Dubai Desert Safari, and Desert Safari in Dubai have high search volume and a lot of competition.

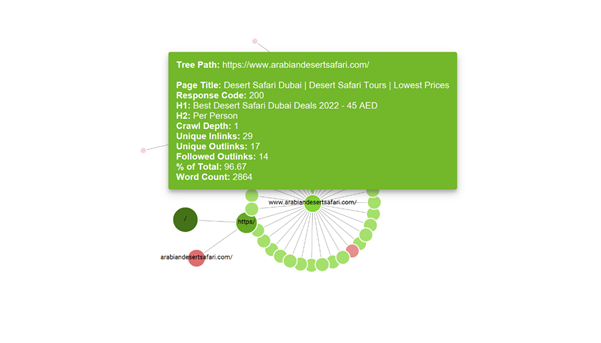

I thought it would be a good fit for explaining an SEO audit, so we’ll practice on this website, Arabian Desert Safari Dubai, and I’ll show you exactly how to carry out an SEO audit step by step. I take the website URL, go back into Screaming Frog, and paste it into the URL bar at the top. Once I click Start, Screaming Frog visits the website, discovers every page on it, and crawls them one by one. You can see that in total it has found 346 pages on the website and has already crawled 342 of them.

As you can see, the tool works extremely fast, although a bigger website will of course take a little longer to crawl. Once the crawl has finished, we have tons of information in front of us. We have the address, which is the page URL, along with the content type, the status code, and the indexability, so if any pages aren’t getting indexed, you can find them very quickly. Keep scrolling and you get much more: the page title and page title length for every page, the meta description and its length (157, 27, 148, 126, and so on), and which pages don’t have a meta description at all. We can also see the H1 tags, including a second H1 column, so if pages have multiple H1 tags (which, by the way, is a problem), we can find that out very easily. We can see the H2 tags and their character length as well. Another thing we can do is go back to the first tab and click any URL to bring up a more detailed analysis of that particular page. It shows the same information in a different view, for people who prefer reading it in columns rather than scrolling row by row, and it gives you an immense amount of information.

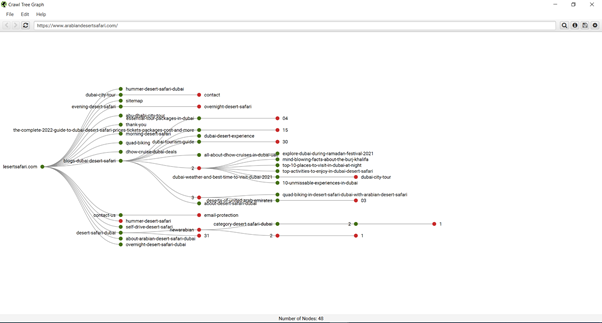

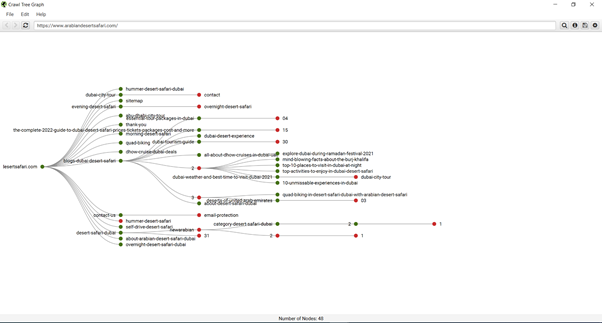

We can even go over to the right-hand pane and open the Site Structure tab, which shows the structure of your website folder by folder. We can also see the response time, which is the time in seconds it takes to download that URL. You can also go to Visualizations in the top menu and choose Crawl Tree Graph, which generates really cool visual representations of exactly how your website is laid out.

So you can see for yourself exactly how your website is structured and which pages link to which. This isn’t the only view available, though.

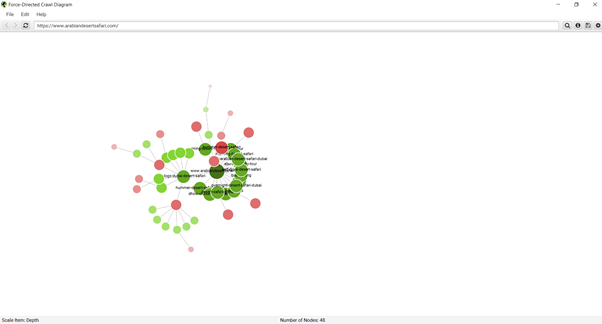

Back in Visualizations, choose Force-Directed Crawl Diagram.

Now we get another visual representation of the website. We have the homepage, which links to internal pages like Evening Desert Safari Dubai, Morning Desert Safari Dubai, and Overnight Desert Safari Dubai, which in turn link to other pages, and so on. These features help you understand exactly how your website looks from a bird’s-eye view, which makes it a lot easier to find problems on the site. Another great thing about Screaming Frog is that you can export all of this data by clicking the Export button. If you’re not a fan of reviewing all this information within Screaming Frog itself, just export the data and review it in Google Sheets, Microsoft Excel, or whatever you prefer. I don’t want to get too carried away here, as I’ll go through all of this in detail, category by category, in the next sections. This has just been a quick overview to show you what the data looks like, so if you were wondering how to find, say, a page’s title, now you know. With the basics out of the way, I’ll see you in the next section, where we’ll start diving into the first category of our SEO audit.

SECTION # 2 – TECHNICAL SEO PROBLEMS

In this section, I’m going to explain how to check for multiple versions of a website, how to carry out an XML sitemap check, how to test the speed of a website (that is, how long it takes to load), and how to check whether a website is mobile-friendly. That last point is very important because Google uses mobile-first indexing, meaning it predominantly uses the mobile version of your website when it indexes and ranks your site. So without further ado, let’s dive straight in.

SEO Check – Only One Version of the Site Is Browsable

This is a very important check to do first on your website, or on whichever client’s site you are working on: make sure that only one version of the site exists. What do I mean by that? A visitor can reach the same website through four different addresses: http://yourdomain.com (for example, http://arabiandesertsafari.com, or whatever the client’s site may be), http://www.yourdomain.com, and the secure versions of both, https://yourdomain.com and https://www.yourdomain.com.

In a nutshell, you want to make sure your website has one main version and that all the other versions permanently (301) redirect to it. For those wondering which version to use, HTTP or HTTPS, www or non-www, I’ll answer that right now: I recommend HTTPS, either www or non-www, as Google gives a slight ranking boost to sites served over HTTPS (with an SSL/TLS certificate).

Besides the slight ranking boost, HTTPS can also help increase your website’s conversion rate: browsers show a little padlock symbol for HTTPS sites, which helps build trust with your visitors.
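To double-check the redirects, a short script can request each of the four versions and compare where they end up. The Python sketch below is a minimal, hypothetical helper (the function names are my own, not from any SEO tool); it assumes the site is reachable and relies on the standard library’s `urllib`, which follows redirects automatically. Note that `urllib` follows both 301 and 302 redirects, so this tells you where each version lands but not whether the redirect is permanent; check the status code in Screaming Frog for that.

```python
from urllib.request import urlopen


def site_variants(domain: str) -> list[str]:
    """Return the four common ways a site can be reached (http/https x www/non-www)."""
    bare = domain.removeprefix("www.")
    return [
        f"{scheme}://{host}/"
        for scheme in ("http", "https")
        for host in (bare, f"www.{bare}")
    ]


def final_url(url: str, timeout: float = 10.0) -> str:
    """Fetch a URL and return the address we end up on after any redirects."""
    with urlopen(url, timeout=timeout) as response:
        return response.url


def has_single_version(final_urls: dict[str, str]) -> bool:
    """True when every variant resolves to the same final URL."""
    return len(set(final_urls.values())) == 1
```

For example, `{u: final_url(u) for u in site_variants("arabiandesertsafari.com")}` collects the landing URL for each version, and `has_single_version` on that dictionary should return `True` for a correctly configured site.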

XML Sitemap Check

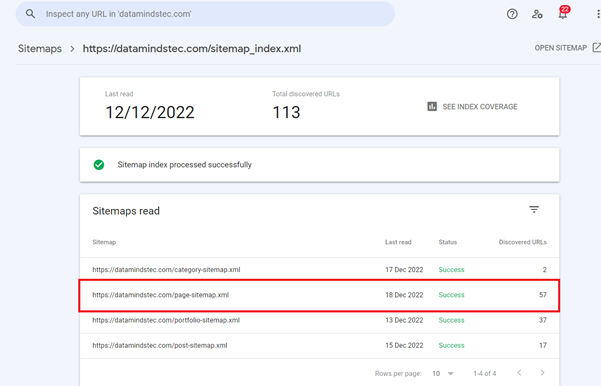

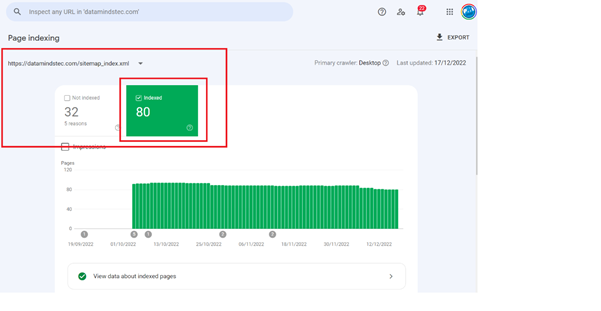

The next thing to check is the XML sitemap. For those who don’t know, an XML sitemap is essentially a file on your website that lists the pages you want search engines to find. What we want to check is that none of our important pages are accidentally being excluded from Google’s index. When you take on a new client, nine times out of ten a web developer has already been working on the website without any knowledge of how SEO works. They end up playing around with the settings in Yoast SEO, Rank Math, All in One SEO, or whichever SEO plugin the website uses, and accidentally set some pages to noindex, meaning Google won’t index those pages. To check indexing, you can also review Google Search Console: look at the discovered URLs and the index coverage report, and make sure no issues are flagged in red.

Here you can see the indexed URLs and the URLs that are not indexed; a URL can be not indexed for many reasons, such as being excluded.

If we click Excluded, you can see two different details listed under it, one of which says “Submitted URL marked ‘noindex’.” These are exactly the ones you want to look at. Opening that, we can see three URLs on the client site that were submitted but marked as noindex. If I take this URL, for example, and open it, you can see it’s https://mehranfoods.com/my-account/, a page where people log in to their accounts. If I search for it in Google using the site: operator followed by the URL, you can see this page does not exist in the eyes of Google. What does this mean? It means someone has deliberately set this page to noindex. In this particular case, a My Account page does not need to be indexed by Google because it is only for users, so there is no issue here. What you want to do is review this report and make sure no important pages are set to noindex, because that will cause you SEO issues.

If I saw the home page, the contact page, or one of the service pages set to noindex, that would definitely be something I’d want to know about and fix. Once you’ve fixed any such issues, click Validate Fix at the top, and Google will recrawl those pages to confirm they are now indexable. One more note: if you go to Excluded and don’t see any “Submitted URL marked ‘noindex’” entry, then your website (or the client’s website) has no problems of this kind, so there’s nothing to worry about.
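If you want to confirm a page’s noindex status outside Search Console, you can look in the two places a noindex directive usually lives: a robots meta tag in the HTML and an `X-Robots-Tag` HTTP header. The sketch below is a simplified, hypothetical checker using only Python’s standard library; it assumes you already have the page’s HTML and header value, and it ignores user-agent-specific header forms such as `googlebot: noindex`.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name in {"robots", "googlebot"}:
            content = attributes.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",")]


def is_noindex(html: str, x_robots_tag: str = "") -> bool:
    """True if the page's robots meta tag or X-Robots-Tag header blocks indexing."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    header = [d.strip().lower() for d in x_robots_tag.split(",")]
    directives = set(parser.directives) | set(header)
    # "none" is shorthand for "noindex, nofollow"
    return bool(directives & {"noindex", "none"})
```

A page like the My Account example would return `True` here; for your home page, contact page, and service pages you want `False`.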

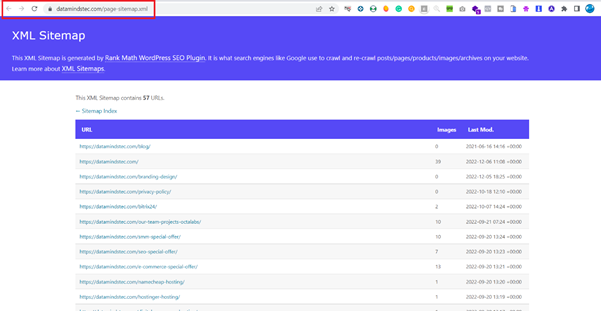

When you open a sitemap URL such as https://datamindstec.com/page-sitemap.xml,

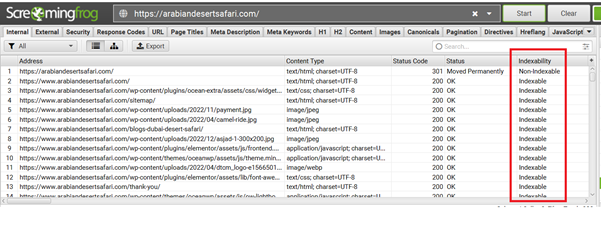

you will see all the page links listed, as in the screenshot above. These are the URLs you submitted to Google, either manually or automatically through Rank Math; being listed doesn’t mean or guarantee that Google has indexed them. A sitemap is a list of links that you are asking Google to index, so check that no pages are missing and that no unnecessary or invalid links are listed. Your sitemap must be up to date, which you can confirm in Google Search Console. After that, check the Indexability column in Screaming Frog, where the status for these URLs should be Indexable, as shown below.
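To compare the sitemap with your crawl, you can pull every `<loc>` URL out of the sitemap and check it against the URLs Screaming Frog reports as Indexable. Below is a minimal Python sketch using the standard `xml.etree.ElementTree` module; it assumes a regular `<urlset>` sitemap such as page-sitemap.xml (not a sitemap index file that lists other sitemaps), and the function names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> list[str]:
    """Return every <loc> URL listed in a <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def compare_with_crawl(sitemap: list[str], indexable: set[str]) -> dict[str, list[str]]:
    """Find sitemap URLs that are not indexable, and indexable URLs missing from the sitemap."""
    listed = set(sitemap)
    return {
        "in_sitemap_not_indexable": sorted(listed - indexable),
        "indexable_not_in_sitemap": sorted(indexable - listed),
    }
```

URLs in the first list are the invalid or unnecessary sitemap entries to fix or remove; URLs in the second list are pages you may have forgotten to include.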

How do you check whether a URL is indexed by Google?

There are two ways to check a URL’s index status:

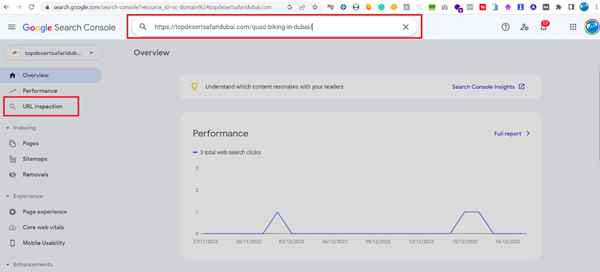

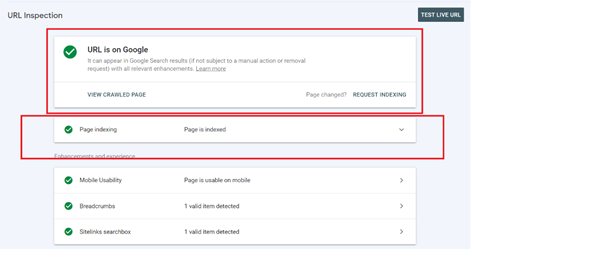

1). Inspect URLs through Google Search Console.

Log in to Google Search Console and use the URL Inspection tool, which tells you whether the URL is indexed or not.

If it isn’t indexed, you can ask Google to index it by clicking Request Indexing.

After a few days, Google processes the indexing request and shows the updated status, as below.

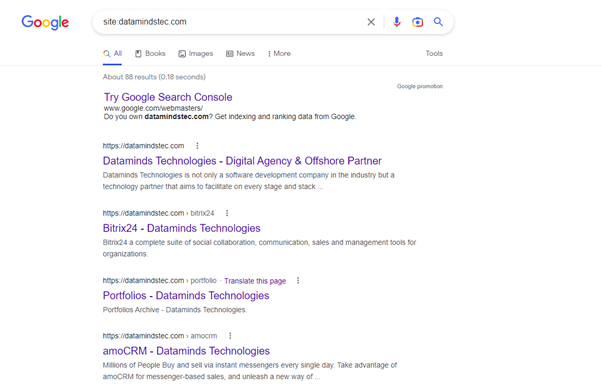

2). Search the Website with Google’s site: Operator

You can check your Google index status by entering “site:” followed by your domain, for instance, “site:datamindstec.com”.

Google will then show the URLs from your site that it has indexed, as below.

Keep in mind that results from the site: operator are not always up to date. For current information about indexed pages, use the URL Inspection tool in Google Search Console.

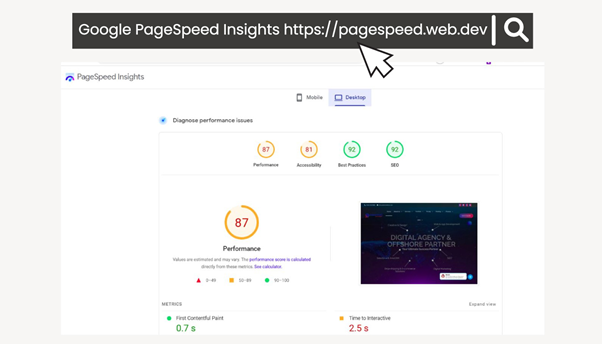

Check the Website Page Speed

The next thing to check is how long the website takes to load. You don’t want a website that takes ages to load, as it results in a poor user experience and can have a negative impact on the website’s rankings. There are a few different tools you can use to check how fast a website loads; my two favorites are GTmetrix and PageSpeed Insights by Google.

A lot of the time people ask me, “Well, what tool is better? Should I use GTmetrix or should I use PageSpeed Insights?” It doesn’t really matter. It all comes down to preference. Both of them pretty much give you the same amount of information.

However, I personally prefer GTmetrix because it lets you test a website from a particular location. As we work with a lot of international clients in the US, Canada, and the UK, I want to check the website’s load time as if I were based in the US or UK, not somewhere else in the world such as Australia. GTmetrix is really good for that, although the free version only offers a limited number of test locations; for all the major server locations, you need a paid subscription.

When you check how fast a website loads, there’s one number to keep in the back of your mind: four seconds. Every single page on the website you are working on should load within four seconds. It’s important to stress that this means every page, not just the home page. Just because your home page loads in three seconds doesn’t mean a service page that takes 15 seconds to load is okay. Making sure every page loads within four seconds is super important for SEO.

I recommend testing your website three times, at different times, and calculating the average (the sum of the results divided by 3). The average score should be greater than 80%, or at least a B+ grade.
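The averaging rule above is easy to apply to a whole list of pages. This Python sketch assumes you have recorded each test run as a (score %, load time in seconds) pair, for example copied by hand from GTmetrix or PageSpeed Insights; the data structure and function name are hypothetical, and the thresholds are this article’s 80% score and four-second load time.

```python
def failing_pages(
    runs: dict[str, list[tuple[float, float]]],
    min_score: float = 80.0,
    max_load_s: float = 4.0,
) -> list[str]:
    """Return the pages whose averaged results miss the score or load-time target.

    `runs` maps a page URL to its test results, each a (score_percent, load_seconds) pair.
    """
    failing = []
    for url, results in runs.items():
        avg_score = sum(score for score, _ in results) / len(results)
        avg_load = sum(load for _, load in results) / len(results)
        # The average score must be greater than 80% and the page must load within 4 s
        if avg_score <= min_score or avg_load > max_load_s:
            failing.append(url)
    return failing
```

Run it over every page you tested, not just the home page; any URL it returns is a candidate for the speed fixes below.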

How to Speed Up the Website

1). Reduce Image Size

Make sure the images on your website are optimized; better still, use the WebP image format or convert your images to WebP so the website loads faster and is more SEO-friendly. WebP is a modern image format that provides superior lossless and lossy compression for images on the web. Using WebP, webmasters and web developers can create smaller, richer images that make the web faster: WebP lossless images are 26% smaller than PNGs.

2). Reduce HTTP requests

An HTTP request is made by a client, such as your desktop or mobile browser, to the server where your website is hosted. The aim of each request is to fetch a resource from the server, such as an HTML page, a stylesheet, a script, an image, or a font. Reducing the number of HTTP requests on your site will not only improve load times but also improve the overall user experience.

To understand HTTP requests, let’s first look at what happens when we visit a website in a desktop or mobile browser.

- You visit a website using a web browser or mobile browser (Chrome, Edge, Firefox, or Safari)

- HTTP requests occur when your browser requests files to download.

- The website’s server returns all the files needed to load the website.

- Once all the files have been loaded, you can view and interact with the website or desired page.

Here’s how you can reduce HTTP requests:

- Combine CSS & JavaScript

- Minify code (HTML, CSS, JavaScript)

- Enable lazy load

- Remove unneeded images

- Reduce image file size

- Disable unneeded plugins

- Reduce external scripts

- Use a CDN
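A quick way to see how many requests a page’s HTML triggers is to count the external scripts, stylesheets, and images it references. This Python sketch uses the standard `html.parser` module, and the names are hypothetical. It is only an approximation, since it does not see resources loaded from CSS (fonts, background images), favicons, or files injected by JavaScript; your browser’s developer tools (Network tab) give the true count.

```python
from html.parser import HTMLParser


class ResourceCounter(HTMLParser):
    """Counts tags in an HTML document that make the browser fetch another file."""

    def __init__(self) -> None:
        super().__init__()
        self.counts = {"script": 0, "stylesheet": 0, "image": 0}

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "script" and attributes.get("src"):
            self.counts["script"] += 1  # external JS file (inline scripts are not counted)
        elif tag == "link":
            rel = (attributes.get("rel") or "").lower().split()
            if "stylesheet" in rel:
                self.counts["stylesheet"] += 1
        elif tag == "img" and attributes.get("src"):
            self.counts["image"] += 1


def count_requests(html: str) -> dict[str, int]:
    counter = ResourceCounter()
    counter.feed(html)
    counter.close()
    return counter.counts
```

Comparing the counts before and after combining files, removing images, or disabling plugins gives you a rough measure of how much each change helped.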

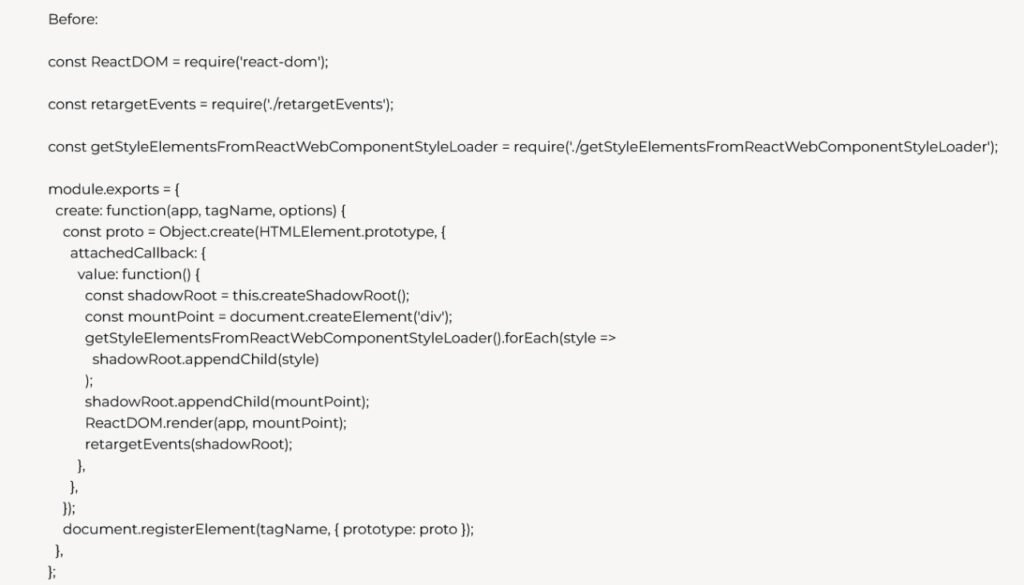

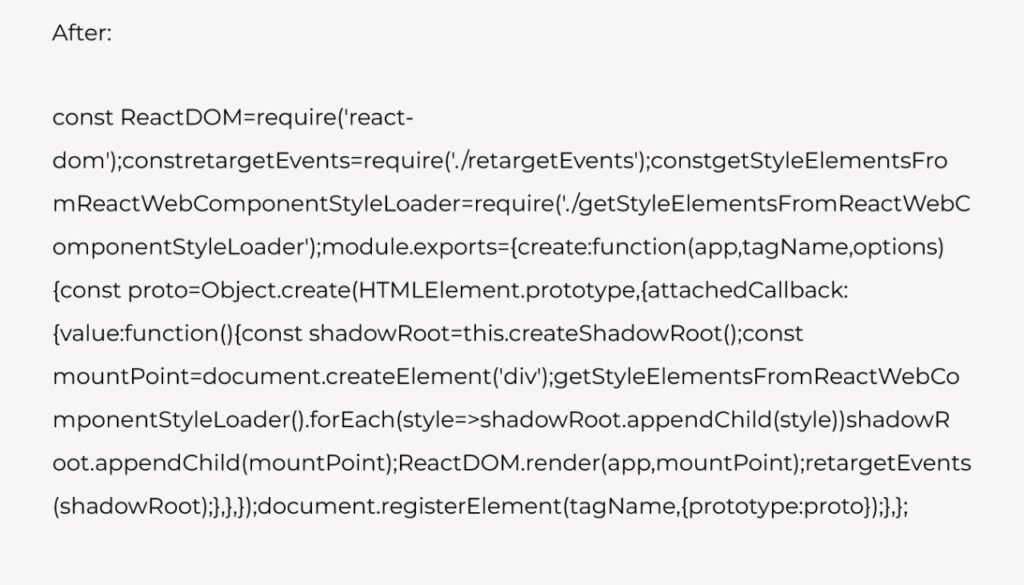

3). Minifying JavaScript and CSS files.

Ask your developer to minify your JavaScript and CSS. Minifying removes whitespace, line breaks, and comments without changing how the code works, so the code often ends up on a single line instead of many, as in the example below:

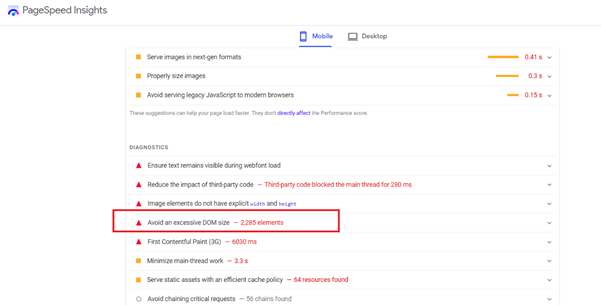
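To make the idea concrete, here is a deliberately naive CSS minifier in Python. It only illustrates what minification does (strip comments, collapse whitespace, drop the spaces around punctuation); it does not handle edge cases such as strings or `url()` values containing those characters, so in production use your build tool’s or plugin’s minifier instead.

```python
import re


def minify_css(css: str) -> str:
    """Naively minify CSS: strip comments, collapse whitespace, tighten punctuation."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove /* comments */
    css = re.sub(r"\s+", " ", css)                         # collapse runs of whitespace
    css = re.sub(r"\s*([{};,>])\s*", r"\1", css)          # no spaces around { } ; , >
    css = re.sub(r":\s+", ":", css)                        # "color: red" -> "color:red"
    css = css.replace(";}", "}")                           # the last semicolon is optional
    return css.strip()
```

For example, `minify_css("h1 , h2 { color: #333; margin: 0 auto; }")` returns `"h1,h2{color:#333;margin:0 auto}"`.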

This is development work, and your developer should also follow best practices for the DOM (Document Object Model, the tree of elements the browser builds from your web page). According to Google, an excessive DOM size can hurt your page speed and performance. It is recommended that your web page have no more than 900 elements, be no more than 32 levels deep, and have no parent node with more than 60 child nodes. To check your DOM size, run the page through Google PageSpeed Insights and look at the “Avoid an excessive DOM size” diagnostic.
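If you want to check those three numbers yourself, the Python sketch below walks the HTML with the standard `html.parser` module and reports the element count, the maximum nesting depth, and the largest number of children under one parent. It is an approximation: it counts every element (including those in `<head>`), and it assumes reasonably well-formed markup, since implicitly closed tags such as an unclosed `<li>` or `<p>` would inflate the depth. The default limits are the figures quoted above; the class and function names are hypothetical.

```python
from html.parser import HTMLParser

# Elements that never have children or a closing tag
VOID_ELEMENTS = {
    "area", "base", "br", "col", "embed", "hr", "img",
    "input", "link", "meta", "source", "track", "wbr",
}


class DomStats(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self.elements = 0
        self.max_depth = 0
        self.max_children = 0
        self._open: list[list] = []  # stack of [tag, child_count] for open elements

    def handle_starttag(self, tag, attrs):
        self.elements += 1
        self.max_depth = max(self.max_depth, len(self._open) + 1)
        if self._open:
            parent = self._open[-1]
            parent[1] += 1
            self.max_children = max(self.max_children, parent[1])
        if tag not in VOID_ELEMENTS:
            self._open.append([tag, 0])

    def handle_endtag(self, tag):
        # Close the most recent matching element (and anything left open inside it)
        for i in range(len(self._open) - 1, -1, -1):
            if self._open[i][0] == tag:
                del self._open[i:]
                break


def dom_report(html: str, max_elements=900, max_depth=32, max_children=60) -> dict:
    stats = DomStats()
    stats.feed(html)
    stats.close()
    return {
        "elements": stats.elements,
        "max_depth": stats.max_depth,
        "max_children": stats.max_children,
        "ok": stats.elements <= max_elements
        and stats.max_depth <= max_depth
        and stats.max_children <= max_children,
    }
```

Treat the result as a quick sanity check; PageSpeed Insights remains the reference for the actual diagnostic.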

4). Use a Cache Plugin

A cache plugin creates static versions of your web pages and delivers those to your visitors. This means that instead of building each page from scratch on every visit, the server can send the stored, cached copy, which is much faster. There are a lot of cache plugins and third-party apps available, such as WP Rocket for WordPress and Booster: Page Speed Optimizer for Shopify.
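To illustrate the idea behind a page cache, here is a toy Python sketch: each page is built once, stored, and then served from the store until it expires or is purged. It is not how WP Rocket or any specific plugin is implemented; the class, the `render` callback (standing in for the slow work of building a page), and the one-hour default lifetime are all assumptions for illustration.

```python
import time
from collections.abc import Callable


class PageCache:
    """Toy full-page cache: build a page once, then serve the stored HTML until it expires."""

    def __init__(
        self,
        render: Callable[[str], str],
        ttl_seconds: float = 3600.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._render = render      # hypothetical slow page builder
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: dict[str, tuple[float, str]] = {}

    def get(self, path: str) -> str:
        now = self._clock()
        cached = self._store.get(path)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]            # cache hit: no page building needed
        html = self._render(path)       # cache miss: build the page the slow way
        self._store[path] = (now, html)
        return html

    def purge(self, path: str) -> None:
        """Drop a cached page, e.g. after its content is edited."""
        self._store.pop(path, None)
```

The purge step matters in practice: after you edit a page, the cached copy has to be cleared so visitors see the new version.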

Check That the Site Is Mobile-Friendly

Another very important check is whether the website is mobile-friendly. I can’t stress enough how important this is: if your website is not mobile-friendly, you’re going to have a lot of problems ranking it on Google. The main reason is that Google uses mobile-first indexing, meaning it predominantly crawls your website with a smartphone crawler and uses the mobile version of your pages to decide how to index and rank them. To check whether a website is mobile-friendly, use a free checker tool for WordPress websites, or Google’s official Mobile-Friendly Test for any type of platform.
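One quick signal you can check in the page source is the viewport meta tag: a responsive page normally declares `<meta name="viewport" content="width=device-width, initial-scale=1">`. The Python sketch below (hypothetical helper names, standard library only) tests only for that tag. Having it does not by itself make a site mobile-friendly, so still confirm the result with Google’s official test.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of the page's <meta name="viewport"> tag, if any."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport: str | None = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """True if the page sets the viewport width to the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    if finder.viewport is None:
        return False
    return "width=device-width" in finder.viewport.replace(" ", "").lower()
```

A page that returns `False` here is very likely to fail Google’s test, so it is a cheap first filter across many URLs.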

SECTION # 3 – ON-PAGE SEO PROBLEMS

Checklist to Follow for an On-Page SEO Audit

1). 404 Pages + Optimized URLs

2). Page Titles, Meta descriptions, Heading tags (H1 & H2)

3). How to Find Duplicate Content

4). How to Find Thin Content

5). How to Find Broken Links on your Website

6). How to Analyze a Site’s Internal Link Structure

1). 404 Pages + Optimized URLs:

When auditing a website from an on-page SEO perspective, the first thing to do is audit the website’s URLs. There are two parts to this. The first is finding dead pages, which includes finding any 404 pages and any 301 redirects the website has.

Finding Dead pages:

- Find any 404 Pages

- Find any 301 redirects

The second part of auditing website URLs is what we call optimization. This only applies to new websites and sites that don’t rank in Google yet. For optimization, audit the website URLs to make sure they follow four main rules:

- Include your biggest keyword in the URL

- Don’t keyword stuff the URL

- Avoid repeating words

- The Shorter the better

1). Include your biggest keyword in the URL

Include the main or biggest keyword for the page in its URL, making sure it is relevant to the page content.

2). Don’t Keyword stuff the URL

Keyword stuffing means adding keywords that have no relevance to the page content. Make sure you don’t add unnecessary or unnatural keywords; only add keywords related to the page title and content. I see so many people literally try to stuff as many keywords as possible into the URL. Please don’t do it. It looks unnatural, and you’re only going to do more harm to your website.

3). Avoid Repeating words.

Avoid repeating words in the URL as well, and don’t add unnecessary keywords to any page. All content should read naturally and be written with the keywords and topics in mind.

4). The Shorter the better.

The shorter, the better. Don’t try to make the longest URL possible by stuffing keywords into it. The shorter and more concise your URL, the easier it is for Google to understand exactly what your page is about.
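The four URL rules above can be turned into a quick check. In this Python sketch the function name and the 60-character path limit are my own assumptions, since “the shorter the better” has no official cut-off. It checks that the hyphenated keyword appears in the path, flags repeated words (a common sign of keyword stuffing), and flags long paths.

```python
import re
from collections import Counter
from urllib.parse import urlparse


def audit_url(url: str, keyword: str, max_path_chars: int = 60) -> list[str]:
    """Return a list of problems with a URL, based on the four URL rules."""
    path = urlparse(url).path.lower()
    words = [w for w in re.split(r"[/\-_]+", path) if w]
    issues = []

    slug_keyword = "-".join(keyword.lower().split())  # "Desert Safari" -> "desert-safari"
    if slug_keyword not in path:
        issues.append(f"keyword '{keyword}' not in URL")

    repeated = sorted(w for w, n in Counter(words).items() if n > 1)
    if repeated:
        issues.append("repeated words: " + ", ".join(repeated))

    if len(path) > max_path_chars:
        issues.append(f"path is {len(path)} characters (limit {max_path_chars})")
    return issues
```

For example, `audit_url("https://arabiandesertsafari.com/evening-desert-safari-dubai/", "desert safari dubai")` returns an empty list, while a stuffed URL like `/best-desert-safari-dubai-desert-safari-deals/` is flagged for repeating “desert” and “safari”.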

The reason this optimization only applies to new websites and sites with no rankings is that if you’re optimizing an existing client website that already ranks in Google and you notice a URL doesn’t include the keyword, you don’t want to start changing an existing URL that already has backlinks pointing to it. Doing so risks losing all the link equity (“SEO juice”), traffic, and authority that the existing URL has built up.

In this case, you could 301 redirect the old URL to the new one, but it’s really not worth the risk, especially if the page already ranks highly in Google. You’re much better off optimizing the page in other ways, such as improving its on-page SEO.

Now that we’ve covered the theory behind URLs, let me head over to Screaming Frog and give you a real-time walkthrough showing exactly how it’s done. The website I’m going to use is https://arabiandesertsafari.com/. Just to clarify, I do not own this website; it’s a client’s website that we recently redesigned.

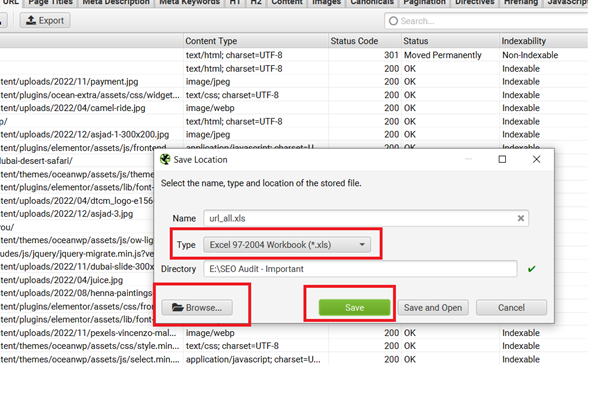

I copy the website URL, go over to Screaming Frog, enter the URL at the top, and click Start to begin the crawl. Once the crawl has finished, since we are auditing this website’s URLs, go to the URL tab at the top. Click it, and it will show you all the URLs on the website.

You can see we get quite a bit of information: the address, content type, status code, status, indexability, indexability status, hash, and so on. This can be a little confusing to review in Screaming Frog itself, so I highly recommend clicking the Export button at the top, choosing the file type you want to save as (I’ll select Excel), choosing where to save it, and clicking Save.

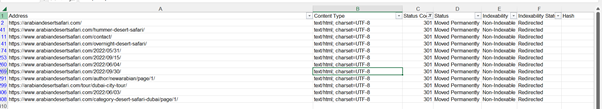

Then open that spreadsheet, which is what I have here. One thing I’ve noticed already is that column F, Indexability Status, shows “canonicalized” for some URLs. This tells me that some pages on this website exist in more than one version. When a page has a canonical tag pointing to a different URL, you are telling Google that the other URL is the primary version of that content and should be indexed instead of this one.

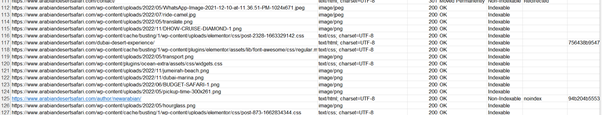

You can see this row right here that all URLs are on HTTPS so I have already explained and it’s very clear that this website has one version and it must be if there will be HTTP then you need to fix it. Further, you can see on row number 41 that there are 301 and non-indexable but it’s showing redirected so you need to make sure that 301 or non-indexable must be redirected. Further, you can see here 109 is also non-indexable but it’s marked as canonicalized meaning the duplicate content page must be non-indexable and mark with tag canonicalized.

Row 125 also shows a non-indexable status, but with a 200 status code. This URL doesn’t need to be indexed because it is an author profile page. To work through the sheet quickly, filter the rows for status codes such as 401 and 301 and for non-indexable URLs, review the results, and fix each row according to what that page requires for SEO.
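To make that filtering step concrete, here is a minimal Python sketch that reads an exported crawl as CSV and keeps only redirects, 4xx client errors, and non-indexable rows. The column names (Address, Status Code, Indexability, Indexability Status) are assumed to mirror the export and may differ between Screaming Frog versions, and the sample rows are hypothetical; in practice you would export as CSV and read the file instead of the inline string.

```python
import csv
import io

# Hypothetical sample mirroring a few columns of a crawl export.
# Real exports contain many more columns; names may differ by tool version.
SAMPLE_EXPORT = """Address,Status Code,Indexability,Indexability Status
https://example.com/,200,Indexable,
https://example.com/old-tour,301,Non-Indexable,Redirected
https://example.com/tour?ref=ad,200,Non-Indexable,Canonicalised
https://example.com/private,401,Non-Indexable,Client Error
https://example.com/author/admin,200,Non-Indexable,noindex
"""


def rows_needing_review(csv_text):
    """Return rows that are redirects (3xx), client errors (4xx), or non-indexable."""
    flagged = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        code = int(row["Status Code"])
        is_redirect = 300 <= code < 400
        is_client_error = 400 <= code < 500
        non_indexable = row["Indexability"] == "Non-Indexable"
        if is_redirect or is_client_error or non_indexable:
            flagged.append(row)
    return flagged


for row in rows_needing_review(SAMPLE_EXPORT):
    # Status code, reason (or "-" when empty), and the URL to investigate
    print(row["Status Code"], row["Indexability Status"] or "-", row["Address"])
```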

2). Page Titles, Meta descriptions, Heading tags (H1 & H2)

Page Titles or Meta Titles for SEO Optimization

In this section, I’m going to explain how to audit a website’s page titles. There are five main things to keep in mind when auditing page titles:

1). You want to keep the page title under 60 characters in length. Now, that does include spaces as well.

2). You want to get your keywords towards the front of the title tag, as words towards the front of the title tag carry more SEO weight.

3). You want to write naturally. Hopefully that is obvious, but trust me, you’d be surprised how many people write unnatural title tags simply because they’re trying to stuff keywords into the title.

4). You want to include single words and variations too. For example, if you are targeting the keyword Desert Safari Dubai, variations could be written like Best Desert Safari Dubai, desert safari in Dubai, Top desert safari Dubai tours, etc. These are all different word variations, also called related keywords.

5). You want to avoid repeated words. This is the same logic that we apply to website URLs as well. So those are the five things you need to check on the website. One more very important point: every page title must be unique and relevant to the content of its page. You can also include multiple keywords or phrases in the title, separated by “|” or “-”. I mostly use “|” as the separator in a page’s meta title, like Desert Safari Dubai | Desert Safari Tours | Best Prices Deal. This is exactly 60 characters. For counting characters and words, you can use the free tool https://wordcounter.net/ or Microsoft Word’s “Word Count” feature. Last but not least, choose the keywords for your titles through proper research with Google’s Keyword Planner, Ahrefs, or Semrush.
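The five checks above can be roughed out in Python. This is a hypothetical helper, not a feature of any SEO tool: it flags titles over 60 characters, a missing keyword, a keyword that only appears in the second half of the title (an arbitrary cut-off chosen for illustration), and repeated words. Note that it flags the repeated “Desert Safari” in the example title, so treat the output as prompts for review rather than hard rules.

```python
import re

MAX_TITLE_LENGTH = 60  # characters, spaces included


def audit_title(title, keyword):
    """Return a list of possible issues with a page title (a rough sketch)."""
    issues = []
    if len(title) > MAX_TITLE_LENGTH:
        issues.append(f"too long: {len(title)} characters")
    position = title.lower().find(keyword.lower())
    if position == -1:
        issues.append("keyword missing")
    elif position > len(title) // 2:
        # Assumed heuristic: "near the front" means within the first half
        issues.append("keyword not near the front")
    words = re.findall(r"[a-z0-9]+", title.lower())
    repeated = sorted({w for w in words if words.count(w) > 1})
    if repeated:
        issues.append("repeated words: " + ", ".join(repeated))
    return issues


title = "Desert Safari Dubai | Desert Safari Tours | Best Prices Deal"
print(len(title))                                 # 60
print(audit_title(title, "Desert Safari Dubai"))  # ['repeated words: desert, safari']
```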

Meta Description for SEO Optimization

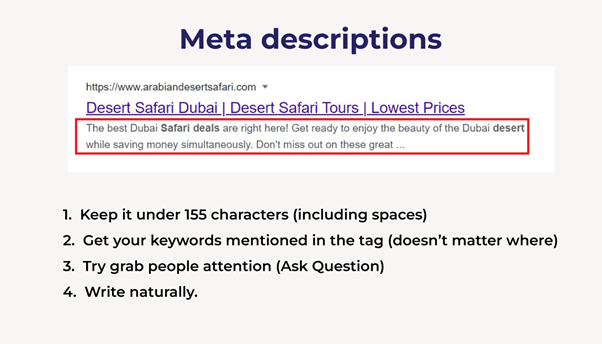

In this section, I’m going to explain how you can audit a website’s meta descriptions. Just to clarify, a meta description is the description you see below your website’s title in the Google search results. Here’s the meta description for the Arabian Desert Safari home page: “The best Dubai Safari deals are right here! Get ready to enjoy the beauty of the Dubai desert while saving money simultaneously. Don’t miss out on these great…” Instead of just showing you this and leaving you to figure out why it is a good meta description, let me go through four things you need to bear in mind, which ultimately determine how you should write a meta description so it performs best for your website.

1). You want to keep it under 155 characters. Once again, that does include spaces.

2). You want to get your keywords mentioned in the tag as well. This is a little different from the title tag: with the title tag, you want your keyword at the front, but in your meta description it doesn’t really matter where you mention the keyword, as long as it is included. Back in the day, adding keywords to your meta description did give you an SEO boost; however, it is no longer a ranking signal. You might be wondering, “If it’s not a ranking signal, then why should I include my keyword in the meta description?” Look at my image: the keyword this page is obviously targeting is Desert Safari Deals. It’s very clear from the URL and the title tag. And when I search for Desert Safari Deals, those words in the meta description have all been put in bold by Google.

This doesn’t have a direct impact on rankings, but it does make the website stand out more in Google and highlights to users that the page is all about Desert Safari Deals, which is its main topic. So when you include your keywords in your meta description, it helps your page stand out by default and can help improve your website’s CTR (click-through rate), which is the percentage of people who click your website from the Google search engine results page. A higher CTR can be a strong signal to Google that people like what they are seeing, and as a result the page may move up in the rankings. So, long story short, definitely include your keywords in your meta description.
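CTR is simple arithmetic: clicks divided by impressions, times 100. A small sketch, using made-up numbers of the kind Google Search Console reports for a page:

```python
def click_through_rate(clicks, impressions):
    """CTR as a percentage: clicks divided by impressions, times 100."""
    if impressions == 0:
        return 0.0  # avoid dividing by zero for pages with no impressions
    return clicks / impressions * 100


# Hypothetical figures for one page over one month
print(round(click_through_rate(45, 1500), 2))  # 3.0
```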

3). Try to grab people’s attention. I personally like to do this by emphasizing that you are the best and offer the best of the best, or by asking questions, which are also a great way to grab people’s attention. In my example, the Arabian Desert Safari meta description says, “The best Dubai Safari deals are right here! Get ready to enjoy the beauty of the Dubai desert while saving money simultaneously. Don’t miss out on these great…” This presents them as the best of the best for Desert Safari Dubai deals, especially in 2023, so the description opens by influencing you. They could also open with a question: “Are you looking for the best deals on Desert Safari Dubai in 2023? Look no further than Amigo Adventures Tourism.” If you own an e-commerce website selling gaming accessories, for example, you could start your meta description with the question “Looking for the best gaming accessories?” followed by “We sell the most premium gaming accessories,” and so on. Adding a question to your meta description is a solid way to grab people’s attention.

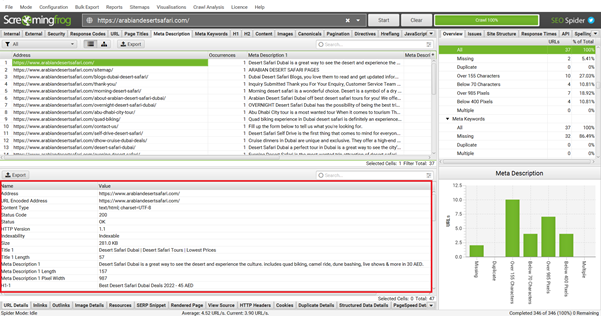

4). Write naturally. This applies to pretty much everything in SEO: be as natural as possible and avoid keyword stuffing. Now let me go back over to Screaming Frog and show you exactly how to analyze a website’s meta descriptions, once again using the same website, Arabian Desert Safari. What I’m going to do is go over to the next tab, which is Meta Description.

As you can see, the Arabian Desert Safari website is already SEO optimized. When you analyze a new website, though, check for pages with empty meta descriptions and for meta descriptions that repeat words or keywords, and fix them.
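Once the meta descriptions are exported, a short Python sketch can flag common problems. The helper below is hypothetical: it marks descriptions that are missing, longer than 155 characters, or duplicated across pages (a related issue worth checking alongside repeated keywords), and the sample pages are invented for illustration.

```python
from collections import Counter

MAX_DESCRIPTION_LENGTH = 155  # characters, spaces included


def audit_meta_descriptions(pages):
    """pages maps URL -> meta description ('' when missing).
    Returns URL -> list of issues, only for pages that have issues."""
    counts = Counter(d.strip().lower() for d in pages.values() if d.strip())
    report = {}
    for url, description in pages.items():
        text = description.strip()
        issues = []
        if not text:
            issues.append("missing")
        else:
            if len(text) > MAX_DESCRIPTION_LENGTH:
                issues.append(f"too long: {len(text)} characters")
            if counts[text.lower()] > 1:
                issues.append("duplicate")
        if issues:
            report[url] = issues
    return report


pages = {
    "/": "The best Dubai Safari deals are right here!",
    "/tours": "The best Dubai Safari deals are right here!",
    "/contact": "",
    "/blog": "x" * 160,
}
print(audit_meta_descriptions(pages))
```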

Heading Tags (H1 & H2s) SEO Optimization

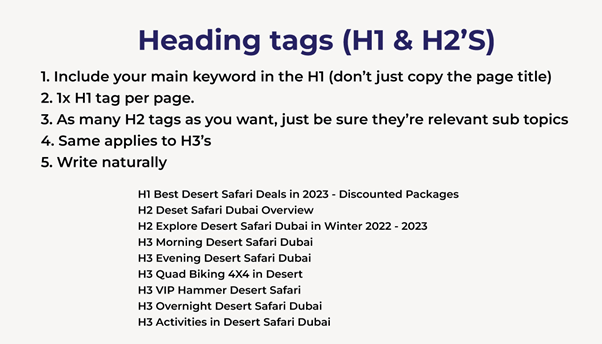

In this section, I’m going to explain to you how you can optimize a website’s heading tags, specifically the H1 and the H2 tag. There are five main things that you want to be aware of.

First thing is that you want to include your main keyword in the H1 tag. Now, don’t just copy the page title. I literally see so many people that go out there, they create a really, really good page title, and they can’t think of anything to use for the H1 tag. So what do they do? They literally copy and paste the page title and insert it as an H1 tag. Definitely do not do that. I will show you exactly what you can do in just a second.

Title: Desert Safari Dubai | Desert Safari Tours | Best Prices Deal

H1 Best Desert Safari Deals in 2023 – Discounted Packages

H2 Desert Safari Dubai Overview

H2 Explore Desert Safari Dubai in Winter 2022 – 2023

H3 Morning Desert Safari Dubai

H3 Evening Desert Safari Dubai

H3 Quad Biking 4X4 in Desert

H3 VIP Hummer Desert Safari

H3 Overnight Desert Safari Dubai

H3 Activities in Desert Safari Dubai

H4 Camel Riding Desert Safari Dubai

H4 Dune Bashing

H4 Face Painting

H4 Live Show

And so on...

The second thing you need to be aware of is that you should only have one H1 tag on a page. If a page has multiple H1 tags, all you are doing is confusing Google. Your H1 tag is essentially the main heading of the page; with multiple H1 tags, you’re telling Google that the page is about multiple different things. So definitely avoid that. Number three, you can have as many H2 tags as you want on your page; you just need to be sure they’re relevant subtopics of your H1 tag. The same applies to H3 tags: you can have as many as you want, as long as they are relevant subtopics of the H2 that comes before them. And finally, as always, make sure you write naturally when composing your H1, H2, and H3 tags. In the example above, the H1, H2, H3, and H4 headings follow the page content and the page title: every heading is relevant to the content and uses keyword variations rather than exact-match keywords.
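To check heading structure on a single page outside Screaming Frog, a minimal sketch with Python’s built-in html.parser can list the headings, count the H1 tags, and flag jumps in heading level (for example, an H4 directly under an H2). The skipped-level rule is an extra heuristic assumed here, in line with the idea that each level should be a subtopic of the one above it; the sample HTML is hypothetical.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collects (level, text) for every h1-h6 element in an HTML document."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._level = None  # level of the heading currently open, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._level = int(tag[1])
            self._text = []

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            self.headings.append((self._level, "".join(self._text).strip()))
            self._level = None


def audit_headings(html):
    """Return (headings, issues): exactly one H1 expected, no skipped levels."""
    parser = HeadingCollector()
    parser.feed(html)
    issues = []
    h1_count = sum(1 for level, _ in parser.headings if level == 1)
    if h1_count != 1:
        issues.append(f"expected exactly one H1, found {h1_count}")
    previous = 0
    for level, text in parser.headings:
        if level > previous + 1:
            issues.append(f"skipped level before H{level}: {text}")
        previous = level
    return parser.headings, issues


html = ("<h1>Best Desert Safari Deals in 2023</h1>"
        "<h2>Desert Safari Dubai Overview</h2>"
        "<h4>Dune Bashing</h4>")
headings, issues = audit_headings(html)
print(issues)  # ['skipped level before H4: Dune Bashing']
```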

How to find Duplicate Content – SEO optimization

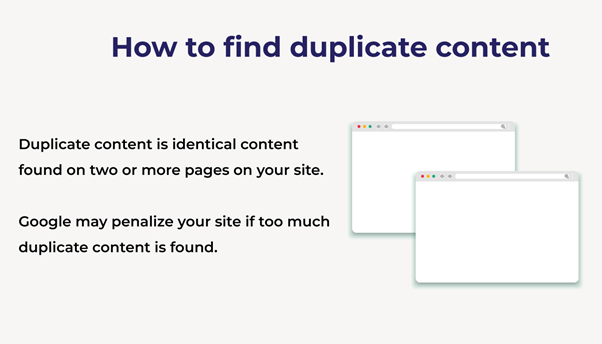

In this section, I’m going to explain how you can find duplicate content. Before walking through the process, let me clarify exactly what duplicate content is for those who don’t know.

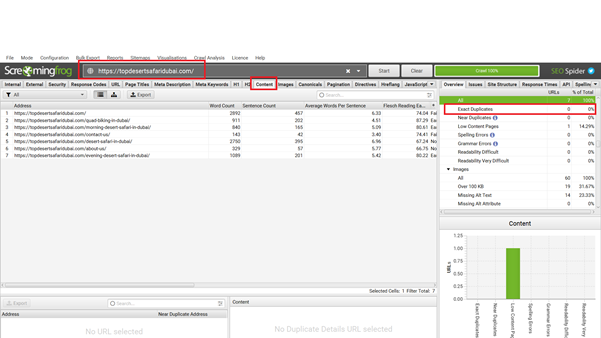

Duplicate content is identical content that appears on two or more pages of your website; in other words, exactly the same content on different pages. We want to find and fix duplicate content because if your website has too much of it, Google may filter those duplicate pages out of its results, and if the duplication looks manipulative, it can even take action against your site. So how do we find it? The process is very straightforward, using the same tool, Screaming Frog. Enter any website URL at the top, like so. For example, for this practice I’m using the website https://topdesertsafari.com.

Once you’ve entered the URL and crawled the site, go to the Content tab right here. It gives you an overview of all the content on all the pages of that website. To find the exact duplicate pages, go to the right-hand panel under Content and click “Exact Duplicates”. Another way is to open the filter menu at the top and choose Exact Duplicates. Just like that, Screaming Frog shows all the exact duplicate URLs across the whole website. Right now, this website doesn’t have any exact duplicate pages. If there were any, you would see them listed there along with a similarity percentage; click each one to track down the pages, then either update the content or remove one of the duplicates.
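Exact duplicates can be found by hashing each page’s content and grouping pages whose hashes match; the Hash column in the crawl export serves a similar purpose. A minimal Python sketch, assuming you already have each page’s content as text (the URLs and content are hypothetical):

```python
import hashlib
from collections import defaultdict


def find_exact_duplicates(pages):
    """pages maps URL -> page content. Groups URLs whose content has the same MD5 digest."""
    groups = defaultdict(list)
    for url, content in pages.items():
        digest = hashlib.md5(content.encode("utf-8")).hexdigest()
        groups[digest].append(url)
    # Only groups with more than one URL are duplicates
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


pages = {
    "/desert-safari": "<p>Evening desert safari with dune bashing.</p>",
    "/desert-safari?utm=ad": "<p>Evening desert safari with dune bashing.</p>",
    "/quad-biking": "<p>Quad biking in the red dunes.</p>",
}
print(find_exact_duplicates(pages))  # [['/desert-safari', '/desert-safari?utm=ad']]
```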

One other thing I want to note for those who have the paid version of Screaming Frog: you can tell Screaming Frog to show you near duplicates as well. These are pages with similar, but not identical, content. This is very handy to know, because if lots of pages on your site have similar content, that can also be a problem. To find near duplicates, go to Configuration; this must be done before you carry out a crawl. Then go to Content, then Duplicates, and check the box that says “Enable Near Duplicates”. As I said, this is only available in the licensed, paid version of the tool.

This is a brand-new Screaming Frog account, which I’ve created just for training and demo purposes. But if you do have the paid version, check this box and then enter a percentage for the similarity threshold. I recommend 90% or above, which is the default anyway. Once the crawl has finished, click “Near Duplicates” in the right-hand panel, and it will show you a list of all the pages on the site with similar content. That brings us to the end of finding duplicate content.
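Near duplicates need a similarity score instead of an exact hash. The sketch below uses Jaccard similarity over three-word shingles with the 90% threshold mentioned above; this is a simple stand-in chosen for illustration, not Screaming Frog’s own algorithm, so its percentages will not match the tool’s. The pages are synthetic.

```python
import re
from itertools import combinations


def shingles(text, size=3):
    """Set of overlapping word sequences ("shingles") of the given size."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    # Texts shorter than `size` still yield one (shorter) shingle
    return {tuple(words[i:i + size]) for i in range(max(len(words) - size + 1, 1))}


def similarity(a, b):
    """Jaccard similarity of the two texts' shingle sets, as a percentage."""
    sa, sb = shingles(a), shingles(b)
    return len(sa & sb) / len(sa | sb) * 100


def near_duplicates(pages, threshold=90):
    """Return (url_a, url_b, similarity %) for every pair at or above the threshold."""
    pairs = []
    for (url_a, text_a), (url_b, text_b) in combinations(pages.items(), 2):
        score = similarity(text_a, text_b)
        if score >= threshold:
            pairs.append((url_a, url_b, round(score, 1)))
    return pairs


base = " ".join(f"word{i}" for i in range(40))
pages = {
    "/tour-a": base,
    "/tour-b": base.replace("word39", "extra"),  # one word changed
    "/contact": "Call us or send a message",
}
print(near_duplicates(pages))  # [('/tour-a', '/tour-b', 94.9)]
```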

How to find Thin Content:

In this section, I’m going to show you how to find thin content on a website. To clarify, thin content is content that offers little to no value to the user. Typically, such pages are short in length, and by short I mean low in word count. Why do we want to identify pages with thin content? Look at it from Google’s perspective and ask yourself: “Would Google really want to show a page on the first page of results if it offers little to no value to the user?” Of course, the answer is no. So we want to find all the pages on our site with thin content and then improve them by writing valuable, unique content. A lot of people ask me, “What is the exact number I should look for to determine whether my page has thin content?” Unfortunately, there isn’t a fixed number, as it varies depending on your industry or the niche your content belongs to. However, as a guide, anything below 350 words can be viewed as thin content. Like I said, this isn’t a magic number; just use it as a guide.

So, how do we find these thin pages on a website? The process is very straightforward. Go over to Screaming Frog, enter a website URL, and carry out a crawl. For this example, I’m using the Dataminds Technologies website. Once your results have loaded, go to the Content tab, exactly as we did when finding duplicate content. In the Content tab, look at the first column, which says “Word Count”. This is self-explanatory: it shows the word count of every page on the website. We can see the Needle Work and Kumash Textile pages have 217 and 218 words, while other pages, such as the digital marketing and e-commerce pages, have more than 500 words, which is a decent number. The remaining pages are the other service and portfolio pages, which target software development companies and digital marketing agencies.

Compare your low-word-count pages with the pages of niche competitors who target the same keywords and topics, and update your content. You’ll usually see that their content is a lot more thorough than yours, and as a result their pages rank higher in Google. It just goes to show that you really don’t want thin content on any website, as it will hold you back when it comes to SEO.

So, that is the process overall. It’s very easy once you sort the pages by word count, as in just a few seconds you can see all the pages below 350 words. Then you can go and expand all those pages with more content.

One final word of advice: location, Contact Us, and even About Us pages are typically shorter than your main service pages, just by the nature of the page. For example, this Contact Us page has fewer than 100 words. If we open it, you can see it is literally just a page with a contact form, and it obviously isn’t going to rank in Google. So thin content on this page isn’t a big issue. When it comes to thin content, it’s really only your home page, main service pages, and blog posts that you want to look at.
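The word-count filter can be sketched in a few lines of Python. The 350-word threshold is the rough guide from this section, and the list of exempt URL patterns (contact, about, location) is a hypothetical way of skipping pages that are naturally short; the 217 and 218 word counts come from the example above, while the URLs and other entries are made up.

```python
THIN_CONTENT_WORDS = 350  # a rough guide, not a fixed rule
EXEMPT_PATTERNS = ("contact", "about", "location")  # hypothetical URL patterns


def thin_pages(word_counts):
    """word_counts maps URL -> word count.
    Returns (url, count) pairs below the guide, lowest count first."""
    flagged = [
        (url, count)
        for url, count in word_counts.items()
        if count < THIN_CONTENT_WORDS
        and not any(pattern in url for pattern in EXEMPT_PATTERNS)
    ]
    return sorted(flagged, key=lambda pair: pair[1])


word_counts = {
    "/needle-work": 217,
    "/kumash-textile": 218,
    "/digital-marketing": 540,
    "/contact-us": 85,
}
print(thin_pages(word_counts))  # [('/needle-work', 217), ('/kumash-textile', 218)]
```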

How to find Broken Links on Your Website:

In this Section, I’m going to explain to you how you can find broken links on a website, also known as 404 pages. We’ve all been there ourselves in the past. We’ve gone onto Google. We’ve carried out a search for something we are looking for. We’ve clicked one of the results. The website opens only to find out that the page does not exist, and we get a big 404 message on our screen.

As you can imagine, this is a really bad user experience, and because it results in a poor user experience, Google does not want 404 pages in its index.

Now I’m going to explain how you can easily find all the 404 pages on a website. There are two different ways to find them. The first option is Google Search Console. If you are working with a client who already has Google Search Console set up on the website, log into that console and go to the Coverage report under Index in the left-hand menu. If the site has any 404 pages, you’re going to see them under the Details box right here, listed as “Submitted URL not found (404)”. Click on this, and you get a detailed list of exactly which URLs return 404. We can see we’ve got one right here for the product and another one for the products page as well.

Remember, this is the 404 page itself, so the page does not exist. If you want to find out which page links to it, meaning which page has the broken link, click on that URL and run Inspect URL. This inspects the URL in real time, comes back with some information, and lets you know exactly which page has the broken link. It is a little bit cumbersome, though: you have to click here, then click that, then click this and inspect it, and then go and find the page. Therefore, I find it a little easier to use the second option, Screaming Frog. For this example, I’m using a website called ironart.pk. This is a new e-commerce website.

Now I’m going to crawl the website using Screaming Frog. To find all the 404 pages the site has, go over to the fourth tab, Response Codes. On this tab, open the filter option right here and click Client Error (4xx), which includes all of our 404 pages. Now you can see this site has only one broken link, which is good. That is primarily because the site’s owner works in SEO himself. If you are working with a client who doesn’t know SEO at all, they are likely to have a lot more broken links.

This is the broken link on this website, on one of the product pages. To double-check, I can copy that URL, go back to the browser, and open it. As you can see, it shows a 404 Page Not Found error, so this page does not exist at all. To find out which pages link to this broken page, go back, click this URL, and then go to the Inlinks tab at the bottom. Just like that, it shows the two pages that link to this broken URL. If the page has been deleted and no longer exists on the website, but Google already knows about it, redirect it to the relevant page within the website. Relevance matters: only if no relevant page exists should you redirect it to the home page, and treat that as the last option. Use a 301 (permanent) redirect for this. Once the redirect is in place, the URL is no longer an issue, because requests for the old 404 URL are sent to another page.
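The same logic, collecting the pages that link to each 4xx URL and then choosing a 301 target, can be sketched in Python. The input format (a list of source and target pairs plus a status-code map, similar to what a crawler’s inlinks export provides) and all URLs are assumptions for illustration, and the fallback to the home page mirrors the last-resort rule above.

```python
from collections import defaultdict


def broken_link_report(links, status_codes):
    """links: (source_page, target_url) pairs; status_codes: URL -> HTTP status.
    Returns broken target URL -> sorted list of pages linking to it.
    URLs missing from status_codes are assumed to be fine (200)."""
    inlinks = defaultdict(set)
    for source, target in links:
        if 400 <= status_codes.get(target, 200) < 500:
            inlinks[target].add(source)
    return {target: sorted(sources) for target, sources in inlinks.items()}


def redirect_rule(broken_url, relevant_page=None):
    """A 301 rule; fall back to the home page only when no relevant page exists."""
    return (broken_url, relevant_page or "/", 301)


links = [
    ("/", "/products/iron-gate"),
    ("/shop", "/products/iron-gate"),
    ("/shop", "/products/iron-bed"),
]
status_codes = {"/products/iron-gate": 404, "/products/iron-bed": 200}
print(broken_link_report(links, status_codes))  # {'/products/iron-gate': ['/', '/shop']}
print(redirect_rule("/products/iron-gate", "/products/iron-gates"))
print(redirect_rule("/old-page"))  # ('/old-page', '/', 301)
```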

How to Analyze a Site’s Internal Link Structure:

In this section, I’m going to show you how to analyze a website’s internal link structure. First things first, why would we want to do this? When lots of backlinks point to one page on your website, you can pass some of that power, or link juice, to another page on your site by adding an internal link to it. This can help that page rank higher in Google without you having to build any links directly to it, which is a massive win when it comes to SEO.

Internal links also help establish more relevancy across your website. As a result, analyzing the internal link structure is a great way to understand which pages link to which, and it shows us whether our internal links could be better optimized. For this example, I’ll be using viperchill.com. To see which pages have internal links, click any URL, maybe this one right here, and then go to Inlinks at the bottom. That tells us which pages link to this specific URL. We can see this page has three internal links coming from the homepage, and it links to these blog posts. However, while this is useful, it doesn’t give you a good picture of the website as a whole, because it only covers this one page; click another URL and it has a whole different list of internal links.
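Counting inlinks for every page at once gives you the site-wide picture that a single page’s Inlinks tab cannot. A minimal Python sketch, assuming a hypothetical list of (source, target) internal links such as a crawler’s link export would contain, plus a list of known pages (for example, from a sitemap); pages with zero inlinks are worth a closer look because nothing on the site points to them.

```python
from collections import Counter


def inlink_counts(links, pages):
    """Count the unique internal pages linking to each known page (self-links ignored)."""
    counts = Counter({page: 0 for page in pages})  # keep zero-inlink pages visible
    for source, target in set(links):  # set() drops repeated identical links
        if source != target:
            counts[target] += 1
    return counts


pages = ["/", "/blog", "/blog/seo-tips", "/blog/traffic"]
links = [
    ("/", "/blog"),
    ("/", "/blog/seo-tips"),
    ("/blog", "/blog/seo-tips"),
    ("/blog", "/"),
    ("/blog/traffic", "/blog/seo-tips"),
    ("/blog/seo-tips", "/blog/seo-tips"),  # self-link, ignored
]
counts = inlink_counts(links, pages)
print(counts.most_common(1))                           # [('/blog/seo-tips', 3)]
print([page for page, n in counts.items() if n == 0])  # ['/blog/traffic']
```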

Instead of clicking through the links and checking the inlinks one page at a time, a much more effective and easier way to analyze internal links on the site is to look at them visually. To do this, go back and make sure you’re on the Internal tab. Then go to Visualizations at the top. You have two main options: a crawl tree graph and a directory tree graph. I’ll show you both of them.

When using the directory tree graph, people usually go for the force-directed version, which is this one right here. So let me go ahead and click that. Then I’ll go back to Screaming Frog and open the other one, which I typically like to use: the crawl tree graph. Let’s look at the force-directed directory tree diagram first. As you can see, it gives us an overview of the whole website. Let me just zoom in. You can edit all these settings; essentially, each node you see is a different page on the website.
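A crawl tree essentially shows how many clicks each page is from the start page. As an illustration of the idea (not of how Screaming Frog draws its graph), a breadth-first search over a hypothetical list of internal links computes each page’s click depth:

```python
from collections import deque


def crawl_depths(links, start="/"):
    """Breadth-first search from the start page.
    Depth = fewest clicks needed to reach a page by following internal links."""
    graph = {}
    for source, target in links:
        graph.setdefault(source, []).append(target)
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in graph.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


links = [
    ("/", "/tours"),
    ("/tours", "/tours/evening"),
    ("/tours/evening", "/tours/evening/vip"),
    ("/", "/contact"),
]
depths = crawl_depths(links)
print(max(depths.values()))  # 3
```

Pages that sit many clicks deep are usually good candidates for extra internal links from stronger pages.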

Remember, internal linking is very important, but only link relevant content pages or sections, so that readers can follow your links and get a good user experience. Apart from internal linking, you should also link out to relevant external pages: for example, when a word or sentence needs further explanation, link to Wikipedia or to the main brand’s official website.

SECTION # 4 – OFF-PAGE SEO PROBLEMS

In this section, I’ll be explaining to you how you can perform an audit of a website from an off-page SEO perspective. Typically speaking, when it comes to auditing a website, there isn’t that much that you need to check from an off-page perspective other than a website’s backlink profile. So that’s exactly what I’ll be doing in this section of this blog. This is going to be the shortest section of the blog, primarily because like I said, there’s only one thing that you really need to check. The bulk of the things which you need to audit on a website is all going to be from an on-page SEO perspective, as you would’ve seen in the last section. So, without further ado, let’s dive straight into this section.

Analyze a Website Backlink Profile for SEO Audit:

When it comes to auditing a website from an off-page SEO perspective, there are two things I would like you to look at. The first is DA, which stands for Domain Authority. When analyzing a website, I always like to see a domain authority of at least 20.

If you see a domain authority of 20 or above, it’s a very good indication that the website has had some decent SEO work in the past. The second thing I would like you to look at is the number of referring domains a website has, and here I like to see at least 50 referring domains. It’s important to focus on referring domains and not the total number of backlinks, because sometimes the same website links to you multiple times. That website might give you five different backlinks when, in fact, they all come from one referring domain. Referring domains are essentially links from unique websites, so focus on the referring domain count rather than the raw backlink number.
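Here is a small Python sketch of why referring domains and backlinks differ, plus the two rule-of-thumb thresholds from above (DA of at least 20 and at least 50 referring domains). The backlink URLs are hypothetical, and counting by hostname is a simplification: SEO tools may group subdomains differently.

```python
from urllib.parse import urlparse

MIN_DOMAIN_AUTHORITY = 20   # guide values from the text
MIN_REFERRING_DOMAINS = 50


def referring_domains(backlink_sources):
    """Unique hostnames among backlink source URLs (a leading 'www.' is dropped)."""
    domains = set()
    for url in backlink_sources:
        host = (urlparse(url).hostname or "").lower()
        if host.startswith("www."):
            host = host[4:]
        if host:
            domains.add(host)
    return domains


def meets_baseline(domain_authority, referring_domain_count):
    return (domain_authority >= MIN_DOMAIN_AUTHORITY
            and referring_domain_count >= MIN_REFERRING_DOMAINS)


backlinks = [
    "https://blog-a.example/post-1",
    "https://blog-a.example/post-2",
    "https://www.blog-a.example/post-3",
    "https://blog-a.example/about",
    "https://blog-a.example/links",
    "https://news-b.example/story",
]
print(len(backlinks), len(referring_domains(backlinks)))  # 6 2
print(meets_baseline(93, 90))  # True
```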

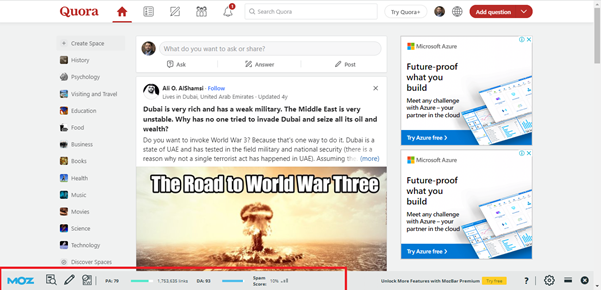

Let me walk you through this in real time and show you exactly how to check a website for both of these metrics. For the first one, DA, you need the free MozBar Chrome extension from Moz, which you add to your Google Chrome browser.

Once you have it installed, you can go to any website. Let’s imagine this is a client I just took on, and I want to figure out how authoritative their website is to get a good indication of how much SEO work will be involved. I simply go over to their website and click the Moz icon right here in the top right-hand corner. Once I click it, a little menu drops down, and we can see the number 93 right here, which is the website’s domain authority. A domain authority of 93 is a really healthy number. The site in this example is Quora, a Q&A and article-submission platform; many SEO experts use Quora to drive traffic to their own websites by posting catchy answers and articles.

So once again, it’s a very clear indication to me that this site has had some SEO done on it in the past.

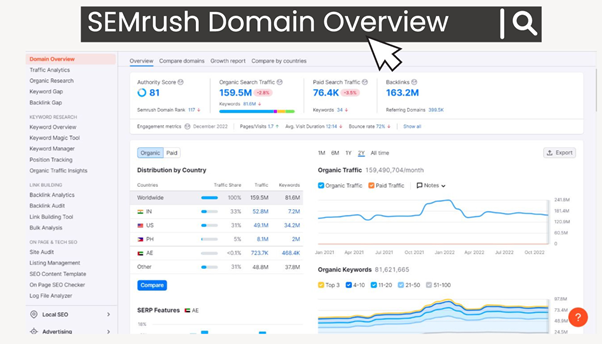

Once you’ve checked the website’s domain authority, you can move on to number two, the number of referring domains. To check how many referring domains a website has, you need an SEO tool, and you have two choices: Ahrefs or SEMrush. Ahrefs is a little expensive, so you can use SEMrush instead. If you are a new SEMrush user, you can use the tool completely free for seven days with the free trial, and some limited features are also available for free. So I’ll use SEMrush here for ease. Let me turn off the Moz Chrome extension so we don’t see it on top of these SEO tools. All you need to do is open either of these tools and plug in the website URL.

In SEMrush, on the main Domain Overview tab, you can see this box right here that says Backlinks 515, and below that, the number of referring domains this site has. According to SEMrush, this site has 90 referring domains, which is almost twice what I recommend, so there is nothing to worry about whatsoever. If we check the same site on Ahrefs, just to see the difference, it shows 83 referring domains right here, a discrepancy of seven referring domains between the two tools. I typically find SEMrush to be a little more accurate at finding all the backlinks and referring domains a site has. It’s the go-to tool we use at the agency these days.

So that is essentially the process in a nutshell. You really do not need to overcomplicate things when auditing a website from an off-page SEO perspective, because you’ll find that most of the problems are on the website itself. However, it’s always good to carry out a quick off-page SEO audit to see how powerful and authoritative the website you’re working on is. And of course, if you find that the website you are auditing has zero referring domains and a domain authority of zero, it gives you a heads-up that there is going to be a lot of SEO work to do to get the site ranking on the first page of Google.

Analyze or Find out Spammy Backlinks:

In this short section, we’ll look at spammy backlinks. Sometimes we build a backlink on a site that is spammy or that Google has blacklisted, and sometimes competitors try to pull your rankings down by creating spammy backlinks to your site with auto-generation software or spammy sites. You can find these links by checking your domain’s backlink profile in SEMrush or Moz. If you decide a backlink is spammy, you can get rid of it with Google’s official Disavow Links tool: copy the backlinks into a text file, upload the file to the disavow tool, and submit it. Be careful, though: this is an advanced feature that should only be used with caution, so read Google’s caution note before submitting any URLs.
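Google’s disavow file is a plain-text (.txt) file with one URL per line, or a whole domain written as domain:example.com, and lines starting with # are treated as comments. A minimal Python sketch that builds such a file’s contents; the helper name and the example URLs are hypothetical:

```python
def build_disavow_file(urls=(), domains=(), note=""):
    """Return disavow-file text: optional # comment, then domain: lines, then URLs."""
    lines = []
    if note:
        lines.append(f"# {note}")
    lines += [f"domain:{d}" for d in sorted(set(domains))]  # disavow whole domains
    lines += sorted(set(urls))                              # disavow single pages
    return "\n".join(lines) + "\n"


text = build_disavow_file(
    urls=["https://spam-site.example/page1.html"],
    domains=["spammy-links.example"],
    note="Spammy links found in SEMrush audit",
)
print(text, end="")
```

Save the returned text as a UTF-8 .txt file before uploading it, and review every line carefully first, since disavowing good links can hurt rather than help.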

SECTION # 5 – GENERAL SEO PROBLEMS

In this section, I’ll explain how you can audit a website for its general problems. What do I mean by general problems? I’m referring to problems that aren’t classified as technical, on-page SEO, or off-page SEO problems. General problems include analyzing a website’s organic traffic, which is definitely worth checking, as it gives you a good idea of how the website is progressing. The other thing to check is the brand visibility of the website. To check brand visibility, search Google for the website’s name, like “Dataminds Technologies” or “dataminds”. If the website shows up in the results, Google recognizes its home page as a brand.

Analyze Organic Traffic Search for SEO Audit:

Another great quick thing to check when auditing a website is its monthly organic traffic. This is useful because you can very easily see how much traffic the website gets every single month. When you review the traffic over a longer period, perhaps a few years, you can clearly see patterns, and it gives you a good indication of how the site is progressing. You can easily spot large spikes in traffic, or whether the site has been penalized, which would show as a massive decline. These are all good things to know when taking on a client and auditing a website, because they give you more information and more data to understand how challenging it will be to rank the site in Google.

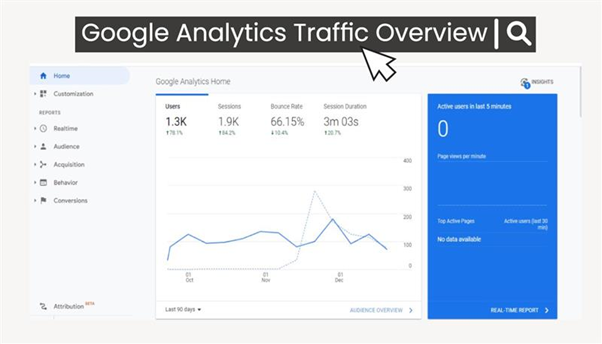

There are two different tools you can use to analyze a website’s traffic. The first, of course, is Google Analytics. To use Google Analytics, the client needs the Google Analytics tag installed on the website. Once the tag is installed and you have access to the Analytics account, log in, go to Acquisition, then Overview, then scroll down and click Organic Search.

Once you open that, you should see something like this. The first thing to do is change the view from Day to Month, because at the daily level we can’t really see what’s going on. Let’s change this to Month, like so. If you scroll up to the top, you can now see the timeframe the traffic covers.
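Switching the report from days to months simply sums daily sessions per calendar month. If you export daily organic sessions, a minimal Python sketch (with hypothetical figures) does the same grouping:

```python
from collections import defaultdict
from datetime import date


def monthly_totals(daily_sessions):
    """daily_sessions: iterable of (date, sessions).
    Returns {'YYYY-MM': total sessions}, built in date order."""
    totals = defaultdict(int)
    for day, sessions in sorted(daily_sessions):
        totals[day.strftime("%Y-%m")] += sessions
    return dict(totals)


daily = [
    (date(2022, 11, 1), 30),
    (date(2022, 11, 2), 35),
    (date(2022, 12, 1), 40),
]
print(monthly_totals(daily))  # {'2022-11': 65, '2022-12': 40}
```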

As we can see, this site was launched recently, in early 2022. It had a big increase in traffic and then flatlined a little before November 2022. Because it is a Desert Safari Dubai niche website, I think November marks the start of the season, which is why SEO traffic went up in November 2022. Over the last three months, there has been a pretty decent increase in traffic, peaking at roughly a thousand visitors. In early December 2022 it dipped a little, and it seems to be rising again after 10 December 2022. So this looks smooth, with nothing to worry about whatsoever. However, if you saw a massive decline, maybe with the chart going up, up, up and then the traffic suddenly tanking, that is a very clear indication that something has gone seriously wrong.

You can also check the traffic of websites you don’t have access to by using a tool such as SEMrush. You can see I’ve plugged in my client’s website, and I’m currently looking at its traffic for the last three months. You may think, “Okay, this traffic is really flat. It looks good. Nothing is declining or inclining, so overall it’s pretty much neutral.” Well, no. If you thought that, you’d be wrong. When you analyze a website’s organic traffic, you really want to view it over the course of years, not just the last three or 12 months. As I said, looking at the website’s all-time traffic gives you a good indication of exactly what is going on.

If you analyze the website’s traffic over the years and see a massive decline, something may have gone wrong; perhaps the client hired a new SEO company or another SEO expert at that point. Who knows what happened there, but at least you have the data and the knowledge, so you can go back, ask them what happened, and find out exactly what the core problem is for this website. That is why it’s a really good idea to check a website’s organic traffic. It gives you more information and puts you in a better position to sell your SEO services, or at least gets you a step closer to solving the problem.
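To spot the kind of sudden drop described above in a long traffic history, you can compare each month with the one before it. The sketch below is a hypothetical helper with an assumed 50% threshold and invented data; for seasonal niches such as desert safari tours, also compare with the same month in the previous year before drawing conclusions.

```python
def sharp_declines(monthly, drop_threshold=0.5):
    """monthly: list of (month_label, visits) in time order.
    Flags months where visits fell by at least drop_threshold versus the previous month.
    Returns (month_label, percent_drop) pairs."""
    flagged = []
    for (_, previous), (label, visits) in zip(monthly, monthly[1:]):
        if previous > 0 and (previous - visits) / previous >= drop_threshold:
            flagged.append((label, round((previous - visits) / previous * 100)))
    return flagged


history = [
    ("2021-01", 800),
    ("2021-02", 850),
    ("2021-03", 900),
    ("2021-04", 300),
    ("2021-05", 320),
]
print(sharp_declines(history))  # [('2021-04', 67)]
```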

To get organic backlinks or more traffic to your website, sign up here: Brand Push

That’s all about the SEO audit. By following my guidelines, you can perform an SEO audit for any kind of website. Last but not least, SEO is a game of patience: it takes time, sometimes years, to rank a website, especially in highly competitive niches.

If you have any queries or questions, feel free to comment or email me at yousuf@infotechabout.com.